Efficiency is a core attribute of high performance, scalable APIs. As developers, we’re tasked with maximizing throughput and responsiveness while minimizing infrastructure costs. At times it can be a challenge, but striking the right balance rewards us with loyal users and happy stakeholders. The universe of applications is diverse and there are many ways to optimize around efficiency; we’ll focus here on an area of relevance to many APIs: I/O.

I/O can be characterized as the interaction between an application and some external resource: a relational or NoSQL data store, a REST API, even just a file on disk. Applications spend most of their lives in one of two modes: I/O-bound, where they’re limited by the responsiveness of an external resource, or CPU-bound, where they’re limited by the speed of the local CPU. For this discussion, we’re interested in applications living primarily in the I/O-bound realm.

Blocking I/O

In a traditional synchronous programming model, once an application thread makes an I/O request it halts execution while it waits for the response. We refer to this as “blocking I/O” because the application thread “blocks” while waiting for I/O operations to complete. The fastest operations might complete in a handful of microseconds, but it’s far more common for these operations to stretch into millisecond or even full second timeframes, depending on the nature of the operation and the transport between the two. The operation could involve a trivial, constant time lookup in a key-value store or a complex SQL query joining a dozen large tables. It could be communicating with another process running on the same server instance or a remote API hosted halfway across the planet. To offset the wait times introduced by these I/O operations, developers will leverage multithreading and multiprocessing to run many “copies” of an application in parallel; this way, one blocked thread won’t directly block the execution of other threads.

At small scale, dedicating a thread or a process to each incoming API request can be a workable solution, but when the concurrent request count grows into the hundreds, thousands, or even tens of thousands, this strategy breaks down. Threads and processes introduce resource demands of their own (specifically, memory usage and context switching) as well as complexities with shared objects and application state. Some languages impose even further restrictions. For example, Python supports the concept of threads but is not truly multi-threaded due to implementation details within its core (see Python GIL).

Combined, these characteristics can deal crippling blows to API scalability. Fortunately, there’s an alternative programming model designed to address these issues, and it’s been gaining momentum across major development platforms over the last few years.

Non-Blocking I/O

As its name suggests, non-blocking I/O is a programming model where code does not wait for an I/O operation to complete before continuing execution. After making a request, the code continues its execution, performing pending work that doesn’t depend on the response to that particular request. A common implementation of this model uses callback functions and an event loop. When requesting an I/O operation, the application registers its interest in the response by providing a function to execute against the resulting value – this is the “callback” function. An event loop sits beneath the application code, largely invisible, managing the event queue and invoking callbacks in response to completed I/O operations. This programming model is commonly referred to as “asynchronous” or “event-driven”, owing to its non-linear execution and use of event loops under the hood. In a moment we’ll look at how this model can bring the concurrency benefits of multithreading without the heavy resource overhead and data synchronization drawbacks.

[Further reading: An Introduction to Asynchronous Programming and Twisted is an excellent series of articles offering a primer on asynchronous programming concepts centered around Python’s Twisted framework.]

An Example

Let’s look at how non-blocking I/O can be advantageous to the efficiency of an API. Assume we have an API responsible for retrieving a customer’s order history. This API typically completes in 100ms, broken down as follows:

- 20ms for authentication, authorization, validation, and business logic (CPU-bound)

- 60ms waiting on database results (I/O-bound)

- 20ms to construct and serialize the response (CPU-bound)

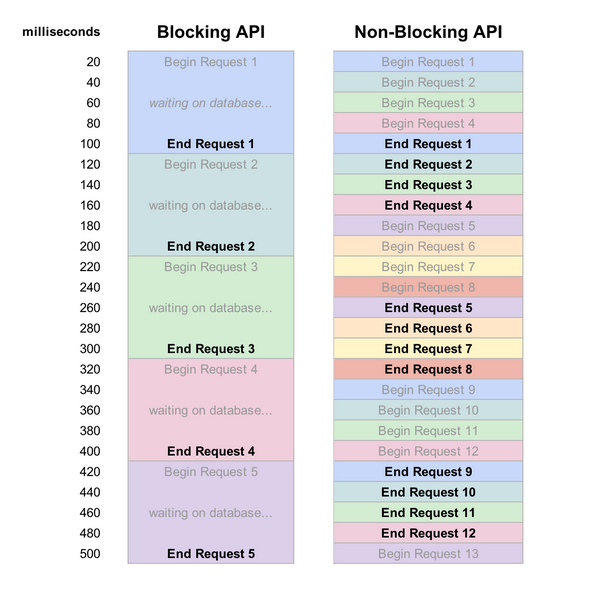

So, 40% of the time is spent in CPU-bound processing and the remaining time is spent waiting on database I/O. The following diagram shows how the first 500 milliseconds (ms) of single threaded execution might look under blocking and non-blocking I/O models:

With these results, we can make a few observations:

The Non-Blocking API provided higher throughput and lower response times. In fact, we’d need 3 threads of the Blocking API to exceed the throughput of a single thread of the Non-Blocking API. For many APIs, the percent of time spent waiting on I/O is even higher than in this contrived example, furthering the potential benefit of a non-blocking model. While many non-blocking platforms discourage multithreading, it’s common to run several independent processes of a single-threaded, non-blocking application to take advantage of modern multi-core hardware. Each process may come close to fully saturating a single CPU core during periods of sustained heavy load.

It’s important to understand that non-blocking behavior will not make an individual request execute any faster start-to-finish. In the previous example, each request stills take a minimum of 100 ms. In fact, an asynchronous model will often slightly extend the start-to-finish time of individual requests due to other requests being interleaved during the waiting-on-I/O periods, but the async model is still a large net gain due to the greatly diminished time that requests sit “in queue”, waiting to even be started. For example, Request 4 doesn’t start processing until the 300 ms mark in the Blocking API. In the Non-Blocking API, it starts at the 60 ms mark and has completed by the 160 ms mark. Even if other in-progress requests delay its completion by 100 ms (causing it to finish at the 260 ms mark), it would still complete before it had even started under the Blocking API.

Real World Results

Let’s move beyond hypotheticals and look at results from a couple production APIs we’ve developed for our customers.

The first is a Twisted (Python) API using Redis as a cache for dynamically generated image data. The API runs in a process-per-core configuration on compute-optimized 8-core Amazon Web Services instances, with Redis centrally hosted on a general purpose dual-core instance. Under cache-heavy scenarios, a single API instance can serve in excess of 1,250 fully authenticated and authorized requests per second (RPS) with response times under 100 milliseconds. In one of our larger testing scenarios, we scaled out to eight API instances and measured throughput just beyond 10,000 RPS with around 60 millisecond response time. At this level of load we became constrained by the network interface of our modestly-sized Redis instance, necessitating a simple instance upgrade (for more extreme scalability scenarios, a data sharding implementation may become necessary). Python is far from the most efficient platform available, but we’ve been pleased with the level of performance achieved through a non-blocking framework like Twisted and a lightning-fast data store like Redis.

Another API uses a different set of technologies to reach even greater levels of efficiency. This API utilizes the high performance, non-blocking nginx web server as a caching proxy for a private data store hosted in Amazon S3. Using its inline Lua scripting support, we’re able to authenticate inbound API requests and compute AWS Signatures for upstream S3 requests completely within nginx. This API tier consists of general purpose dual-core instances in an Auto Scaling Group behind an Elastic Load Balancer, so as the inbound request load increases, new instances are automatically provisioned to handle it. With nginx’s content caching disabled (in other words, reaching out to S3 on every request), an instance can serve around 1,200 RPS with 40 millisecond response times. Enabling nginx content caching helps boost throughput to over 1,800 RPS with bursts over 2,500 RPS. The higher complexity and larger file sizes of this API cause us to hit per-instance network bottlenecks more quickly than the other API, but its horizontally scalable design combined with the powerful capabilities of S3 ensures that we can adapt to the demand and serve a very high request volume while keeping infrastructure costs low.

Wrapping Up

Platforms supporting non-blocking I/O are becoming increasingly common. Node.js is a modern, popular JavaScript-based platform designed from the ground up to be event-driven and non-blocking. It is somewhat unique in this regard, as many non-blocking platforms are built upon languages that have traditionally been used in blocking fashion, such as Java NIO, Twisted (Python), Cramp (Ruby), and async/await in .NET. Some platforms do an admirable job of hiding the complexities typically associated with coding in this style, but there’s often still a learning curve as developers shift their mindsets from synchronous to asynchronous application design.

Non-blocking models don’t always make sense, especially for applications leaning heavily on CPU-bound operations. As with any solution, it’s important to instrument the applications and analyze their behavior under the stress of large-scale load testing. Some APIs will end up as a combination of lightweight I/O-bound tiers and heavy CPU-bound tiers coordinated through message queues or pub/sub channels. In software, there’s never a one-size-fits-all architecture, but embracing non-blocking I/O patterns can often vastly improve the efficiency of I/O-bound application tiers and cement their role as high performance, low cost components of a scalable solution.